Skirmishing about environmentalism may well continue forever, but the major war is over. It lasted far longer than most people realize.


February/March 2000

Volume 51, Issue 1

One of this century’s profound cultural transformations began in the 1960s, when ecological thought took hold and fostered a new seriousness toward earth stewardship. But what happened then was really a transition. Present-day environmentalism represents an elaboration of core ideas developed far earlier by American conservationists, especially the seminal concepts and plans of the two Presidents Roosevelt and their allies. They prepared the way so that Americans later confronted by increasing threats to earth’s ecosystems could erect a sophisticated superstructure on ramparts already standing.

Movements that foster ideas that shape the fabric of American thought usually evolve in reaction to abuses that constrict the lives of citizens or threaten the nation’s future. The conservation movement came into existence in the first years of this century in response to the unprecedented plunder of public resources in the last three decades of the nineteenth century.

In the forefront of that pageant of destruction and waste was a rapacious lumber industry. Having begun in Maine and swept westward to California’s towering groves of redwood trees, the newly mechanized industry clear-cut the bulk of the country’s longleaf pine forests and left blackened wastelands in its wake.

Elsewhere, as the killing power of rifles increased, whole species were slaughtered on a scale the world had never seen. That decimation came to a climax on the Great Plains, where in the space of little more than a decade the vast herds of buffalo—the wildlife wonder of this continent—were nearly exterminated by “market hunters.” In other regions hunters who worked for commercial enterprises conducted relentless raids on edible birds, on fur seals, and on shore and migratory birds whose feathers were in demand. These endless hunts and those conducted for sport exterminated several species of bird and drove kingfishers, terns, eagles, pelicans, egrets, and herons to the brink of extinction.

The slaughters evoked angry protests from some Americans. In 1877, Secretary of the Interior Carl Schurz tried to start a campaign to halt the unfettered felling of the nation’s timberlands. A German emigrant familiar with the forestry practices of his homeland, Schurz issued a report in which he denounced lumbermen who were “not merely stealing trees, but whole forests.” But his plans to initiate scientific management of the nation’s resources were thwarted by Congress, and two decades would pass before growing public protest gave reformers an opportunity to push for laws and policies that would change the course of our history.

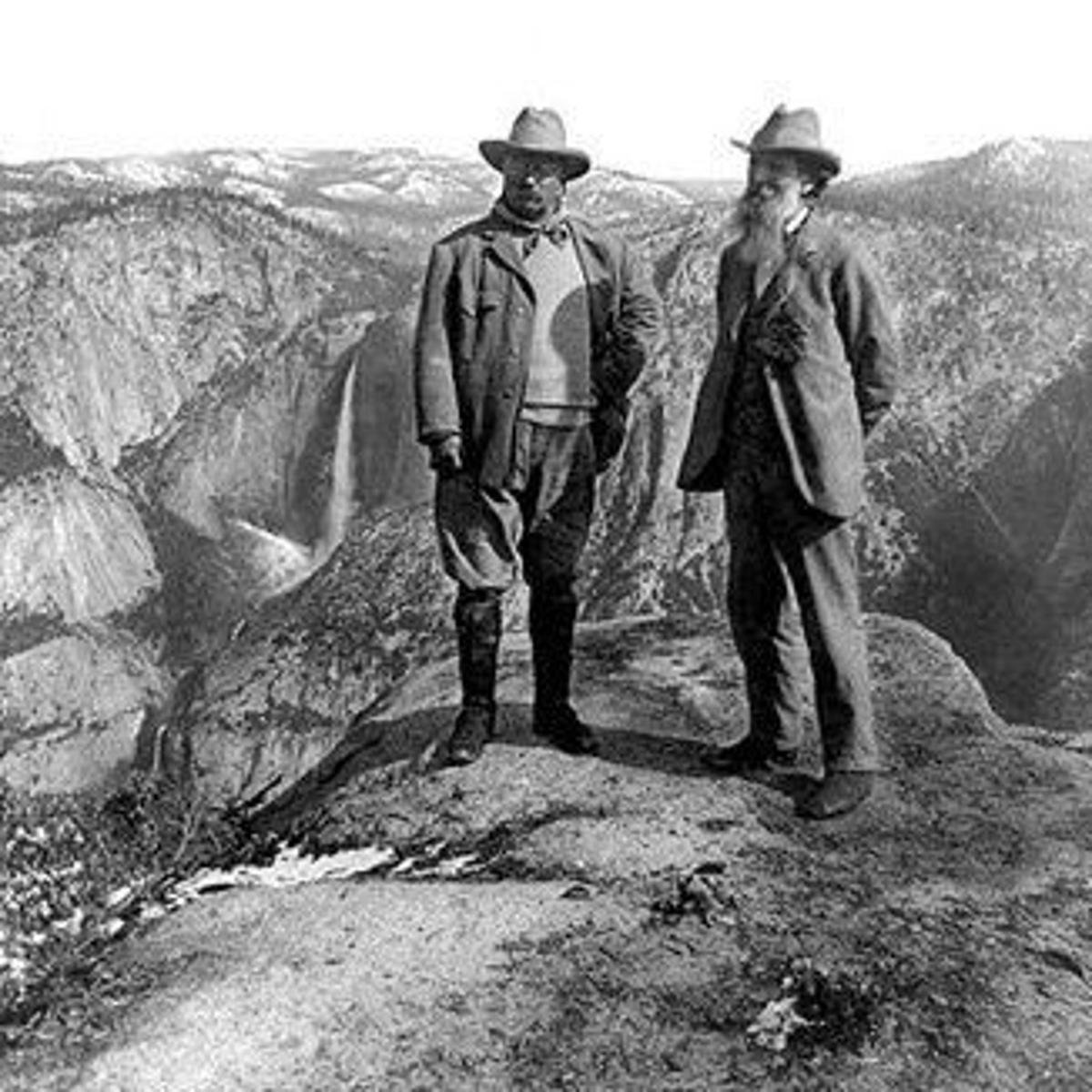

The man who became the leader of the nascent conservation movement was President Theodore Roosevelt. As a young rancher in what is now North Dakota, Roosevelt had learned what happened when nature’s iron laws were ignored. He was a natural-born reformer, and when an assassination catapulted him into the White House in 1901, he was ready to lead a crusade for land policies that would alter the values and attitudes of the American people.

The president began by declaring in his first State of the Union address that resource issues were “the most vital internal problems of the United States.” A politician who wore his convictions on his sleeve, he spoke out against “the tyranny of mere wealth” and galvanized a cadre of young foresters by exclaiming, “I hate a man who skins the land.”

Roosevelt chose for his chief adviser on resource issues the dynamic thirty-six-year-old chief of the Division of Forestry in the Department of Agriculture, Gifford Pinchot. Pinchot had little power as the head of a tiny new bureau, but his vigorous ideas about land stewardship won him a preferred place at the new President’s council table. Roosevelt’s crusade needed a motto, a slogan, and Pinchot and his friends soon coined a word that expressed the bundle of ideas the president was considering. Pinchot and his fellow forester Overton Price had been discussing the fact that government-owned forests in British India were called Conservancies, and this resonant word was enlarged into the nouns conservation and conservationist.

Roosevelt and Pinchot had to confront an unsympathetic Congress, and they knew from the outset that to do so they must sell conservation to the American people as well. Roosevelt welcomed this challenge, for he was a superlative teacher and saw himself as the trustee of the nation’s resources.

The policies and programs that Roosevelt and Pinchot implemented over the seven years of Roosevelt’s Presidency focused on specific issues. They converted idle forest “reserves” into a functioning system of national forests to be managed by a corps of trained foresters. The President won over hostile Western congressmen by supporting a new federal program to build dams and homestead-style irrigation projects in arid parts of the West. He also issued orders that stopped extravagant giveaways of public resources and simultaneously challenged a balky Congress to enact laws requiring that hydropower sites and mineral resources be developed only under federal licenses and leases.

His audacity was what made many of Theodore Roosevelt’s landmark conservation achievements possible. In his second term he rewrote the rulebook on presidential power by placing his signature on sweeping Executive Orders and proclamations, rejecting his timid predecessors’ “narrowly legalistic view” that the President could function only where a statute told him to, and he plumbed the Constitution to find powers for himself. His glory was that he dared to use his pen to change the face of his country’s landscape.

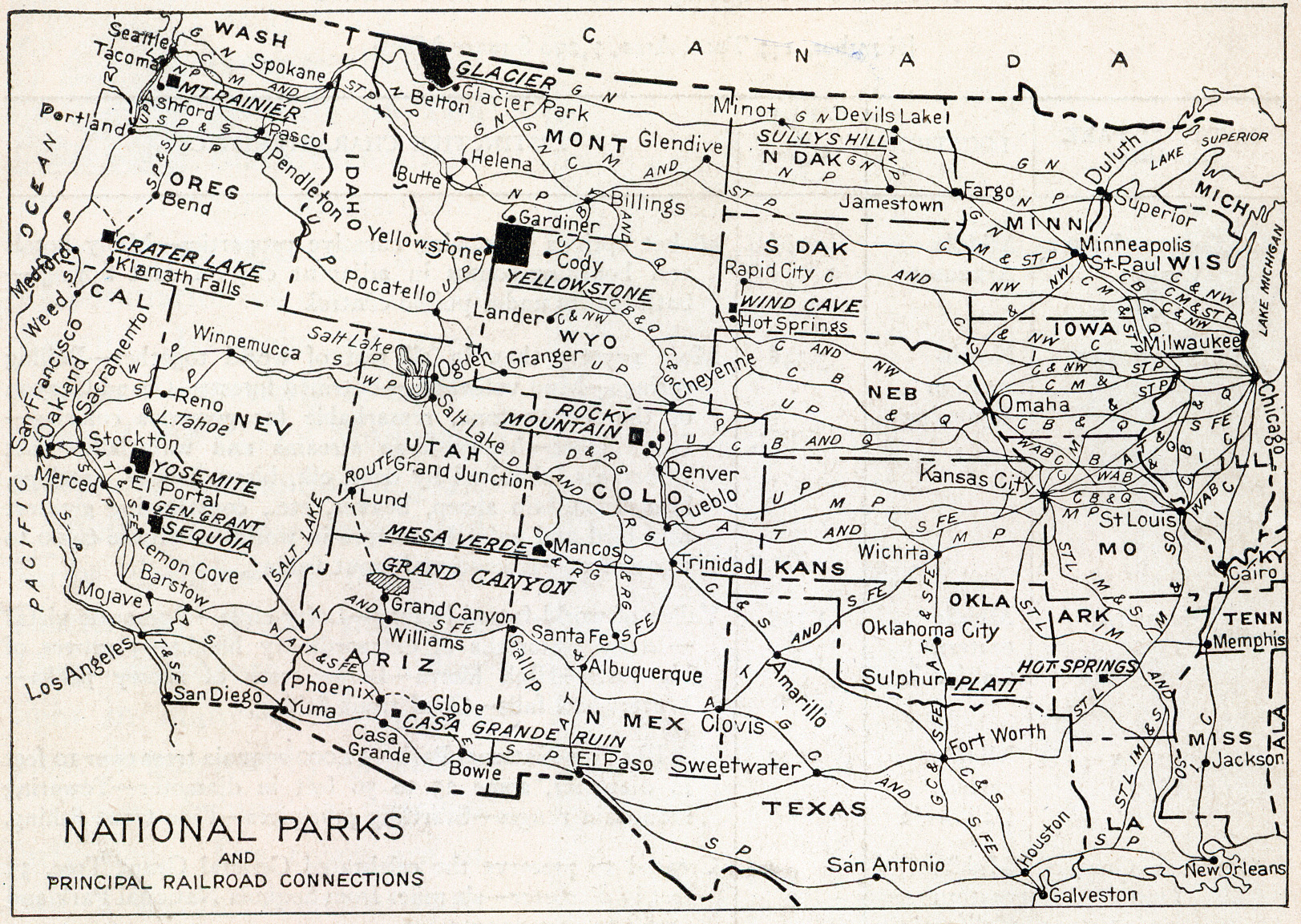

Before he left office, he had replaced a century-old policy of land disposal with a new policy of setting land aside for conservation. As a result of decisions he made, the lands designated as national forests increased from 42 million acres to 148 million, and 138 new forest areas were created in twenty-one Western states. With additional strokes of his pen, he carved out four huge wildlife refuges and set up fifty-one smaller sanctuaries for birds, to protect what he called “the beautiful and wonderful wild creatures whose existence was threatened by greed and wantonness.” With another flourish he established eighteen national monuments, including four—Grand Canyon, Olympic, Lassen Volcanic, and Petrified Forest—so majestic that Congress subsequently converted them into national parks.

Executive action was effective as far as it went, but it was essentially a policy to preserve some of the West’s unsullied lands. If resources damaged during the raider years of the nineteenth century were to be renewed and rehabilitated, there would have to be a truly national approach, with a working partnership between the executive and legislative branches of government. Theodore Roosevelt was a splendid preacher-at-large, but few members of Congress were stirred by his rhetoric. Indeed, in the decade after he left office only two significant conservation statutes were passed: the Weeks Act of 1911, which permitted the purchase of forested lands at the headwaters of navigable streams, to make possible national forests in the East, and the 1916 measure that created the National Park Service.

However, where conservation was concerned, Roosevelt’s influence did not wane after he left Washington; instead it came to a culmination during his third-party Bull Moose presidential campaign in 1912, when he forced his two opponents to compete with him as advocates of reform. Some of the men who were destined to lead the nation in the crisis years of the Great Depression—most notably Harold Ickes, George Norris, Sam Rayburn, and Franklin Delano Roosevelt—first lit their political torches at the bonfire he created in the 1912 presidential election.

His words and deeds left a spacious legacy. The conservation creed he espoused altered the outlook and the values of many Americans, encouraging citizens to form grassroots organizations and influence local and regional political decisions. And the ideals he championed not only changed his country’s land-stewardship practices but encouraged other nations to institute comparable programs.

Conservation fell out of favor during World War I and the 1920s. Existing national lands were better managed, but habitat for wildlife continued to shrink, wartime demands for wheat encouraged improvident plowing that would in time transform parts of the Great Plains into dust bowls, and little was done to restore the forestland gutted during the late nineteenth century.

The second wave of the conservation movement was launched when Franklin D. Roosevelt began his New Deal in the demoralizing depths of the Great Depression, when one of every four Americans was unemployed. Roosevelt’s experiences as governor of New York had suggested to him that providing conservation jobs for large numbers of young men would be an effective way to combat unemployment. In his acceptance speech at the 1932 Democratic National Convention, he put conservation in the forefront, announcing “a wide plan of converting many millions of acres of marginal and unused land into timberland through reforestation.”

The Civilian Conservation Corps (C.C.C.), created in the first weeks of his Presidency with nearly unanimous support from Congress, was probably the most effective of all New Deal programs. The jobs it generated provided dollars for destitute families and gave men valuable skills, and the work itself improved the economic outlook in nearby communities. More land-renewal work went on during Franklin Roosevelt’s first term than at any other time in the nation’s history. Corpsmen built small dams, tackled soil erosion problems, planted more than two billion trees, and built everything from washrooms to grand rustic lodges in national parks. To make the program truly national and provide more jobs, the President extended the East’s new system of national forests, allocating more than thirty-seven million dollars (appropriated by Congress for “public works”) to purchase eleven million acres of wounded, cut-over land. Before the war closed the camps, more than two and a half million young men served in the C.C.C.

Historians overlook the fact that, in certain regions, the New Deal was at its core a program of resource conservation. Congress, acting in tandem with the president, enthusiastically financed initiatives that ranged from a new Soil Conservation Service to the acquisition of millions of acres of swamps, lakes, and submarginal farmlands, enlarging the nation’s sanctuaries for migratory birds and wildlife.

The building of dams and hydroelectric plants was also a hallmark of the era. Construction of the world’s then-highest dam on the Colorado River (a huge federal project that moved ahead on schedule through the darkest years of the Depression) reflected the belief that floods should be controlled and the energy potential of the nation’s rivers “harnessed,” as the then-ubiquitous expression went. Dam building was ultimately carried to extremes, but the electricity the dams generated fed a program that produced enormous benefits for tens of millions of Americans: the Rural Electrification Administration, which began in 1935.

At the time, nine-tenths of the thirty million people who lived in rural America did not have electric power. The REA law underwrote the formation of local electric cooperatives and provided low-interest loans to extend transmission lines into the countryside. In a few years, the program had raised the standard of living throughout the country and was furnishing the cheap energy for starting businesses and enabling small towns to grow.

Of necessity, the FDR administration fashioned its crash programs piecemeal, responding to specific needs, but in so doing, it made conservation a mainstream concept and encouraged scientists allied with the movement to broaden their gaze and think holistically (the word had appeared just a decade earlier) about the earth’s resources. Those quiet conservation-minded scientists, among them the University of Wisconsin professor Aldo Leopold and a young woman named Rachel Carson, who worked in the Fish and Wildlife Service from 1936 through 1949, became important after the war, when atomic physicists and engineers rose as apostles of unlimited resources. The voices of the conservationists, and the challenging questions they asked, would gradually acquire authority when some of the miracles of Big Science turned out to threaten the ecosystems that sustained life on earth.

Today, it is hard to imagine how eagerly Americans in the 1950s accepted the “atoms for peace” thesis of inexhaustible dirt-cheap atomic energy. A vision of an atom-powered era of supertechnology, sketched initially by the physicist John von Neumann, was elaborated in a 1957 book,

The American people embraced these visions partly because the awe and secrecy that enveloped nuclear research meant that at first few citizens had either the knowledge or the temerity to question them. And the optimism thus generated ultimately helped persuade our leaders that the United States could simultaneously go to the moon, feed the world’s hungry, carry out a program to modernize the economies of Latin America, and win a war in Southeast Asia. As the space program got under way, NASA’s rocket master, Wernher von Braun, put a capstone on these promises when he declared that the exploration of space was “the salvation of the human race.”

But, at the same time, ground-level evidence was mounting that the overall environment was deteriorating. In 1956 an atmospheric scientist measured the ingredients of the gathering pall over Los Angeles and chose the word smog to describe his baleful discovery. Meanwhile, daily flushings from industries and cities were turning the nation’s rivers into sewers. At one point in the mid-sixties, the mayor of Cleveland summed up a growing viewpoint when he predicted that the United States would soon become “the first nation to put a man on the moon while standing knee-deep in garbage.”

The first serious broad look at the impact of new technologies on the planet’s life-support system began in the United States in 1958. It was conducted by the marine biologist Rachel Carson. The ostensible subject of her four-year study was the effect on wildlife of the potent new poisons being produced by the chemical industry; in the end her research led her to compose a treatise that thrust the concept of ecology into the mainstream of human thought.

In 1958, some of Carson’s friends in Massachusetts and on Long Island, angry at local mosquito-control agencies drenching their neighborhoods with DDT, persuaded her to write a protest article about the environmental consequences. Her piece was rejected by Reader’s Digest, but Carson had become convinced this was an urgent issue and she decided to enlarge her piece into a short book, even though she doubted it could ever be a bestseller like her previous one,

During most of the four years that Carson took to complete

She achieved her first goal by presenting detailed accounts of spraying fiascoes in places that ranged from a Nova Scotia forest to the rice fields of California. This section of

As she had anticipated, chemical and agricultural trade groups mustered their scientists and mounted an expensive public relations campaign to discredit her credentials and her conclusions. Some critics asserted that she was not a “professional scientist”; a nutrition expert at Harvard’s Medical School castigated her for “abandoning scientific truth for exaggeration” and characterized her conclusions as “baloney”; the director of research for a leading manufacturer of pesticides put her down as a “fanatical defender of natural balance.”

There were other, cruder attacks: Ezra Taft Benson, who had been Secretary of Agriculture in the Eisenhower administration, wondered “why a spinster with no children was so concerned about genetics” and surmised that Carson was “probably a Communist.” However, President Kennedy was impressed with her presentation and had his Science Advisory Committee evaluate her findings. The dispute dissipated when, in April 1963, the prestigious committee submitted a report that vindicated her thesis.

Cancer claimed Rachel Carson’s life in the spring of 1964. She did not live long enough to be aware that

I was in charge of the Department of the Interior when

Only later, with hindsight, were many of us who had been caught up in the excitement of those times able to see them not as the dawn of a new way of looking at the world but rather as the final fruition of a conservation movement that had begun with the century. Indeed, the wise and always eloquent Aldo Leopold had provided a unifying theme decades earlier, when he wrote: “We abuse land because we regard it as a commodity belonging to us. When we see land as a community to which we belong, we may begin to use it with love and respect.”